Scrapy is an open source framework developed in Python that allows you to create web spiders or crawlers for extracting information from websites quickly and easily.

This guide shows how to create and run a web spider with Scrapy on your server for extracting information from web pages through the use of different techniques.

First, connect to your server via SSH. If you haven't done so yet, we recommend following our guide on connecting securely with SSH. If you are working on a local server, skip this step and simply open its terminal.

Creating the virtual environment

Before starting the actual installation, update the system packages:

$ sudo apt-get update && sudo apt-get upgrade

Next, install the dependencies that Scrapy requires:

$ sudo apt-get install python-dev python-pip libxml2-dev zlib1g-dev libxslt1-dev libffi-dev libssl-dev

Once the installation is complete, you can set up virtualenv, a Python package that lets you install other Python packages in an isolated environment, without affecting the rest of the software on the system. Although optional, this step is highly recommended by the Scrapy developers:

$ sudo pip install virtualenv

Then, prepare a directory to install the Scrapy environment:

$ sudo mkdir /var/scrapy

$ cd /var/scrapy

And initialize a virtual environment:

$ sudo virtualenv /var/scrapy

New python executable in /var/scrapy/bin/python

Installing setuptools, pip, wheel...

done.

To activate the virtual environment, run the following command. Since "source" is a shell builtin rather than a program, it must be run without sudo:

$ source /var/scrapy/bin/activate

You can exit at any time through the "deactivate" command.
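
To confirm that the environment is really active, one option is to ask the Python interpreter itself. The snippet below is a minimal sketch, not part of Scrapy: the function name in_virtual_environment is hypothetical, and the check assumes either an older virtualenv release (which sets sys.real_prefix) or a newer one (where sys.base_prefix differs from sys.prefix).

```python
import sys


def in_virtual_environment():
    """Return True when the interpreter appears to run inside a virtual environment."""
    # Older virtualenv releases expose sys.real_prefix; newer ones (and venv)
    # instead make sys.base_prefix point at the system-wide installation.
    base_prefix = getattr(sys, "real_prefix", None) or getattr(sys, "base_prefix", sys.prefix)
    return base_prefix != sys.prefix
```

Calling in_virtual_environment() from a Python prompt started after activation should return True, and sys.prefix should then point to /var/scrapy.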

Installation of Scrapy

Now, install Scrapy and create a new project:

$ sudo pip install Scrapy

$ sudo scrapy startproject example

$ cd example

The newly created project directory has the following structure:

example/
    scrapy.cfg            # configuration file
    example/              # Python module of the project
        __init__.py
        items.py
        middlewares.py
        pipelines.py
        settings.py       # project settings
        spiders/
            __init__.py

Using the shell for testing purposes

Scrapy allows you to download the HTML content of web pages and extract information from them using different techniques, such as CSS selectors. To make this easier, Scrapy provides a "shell" in which you can test the extraction of information in real time.

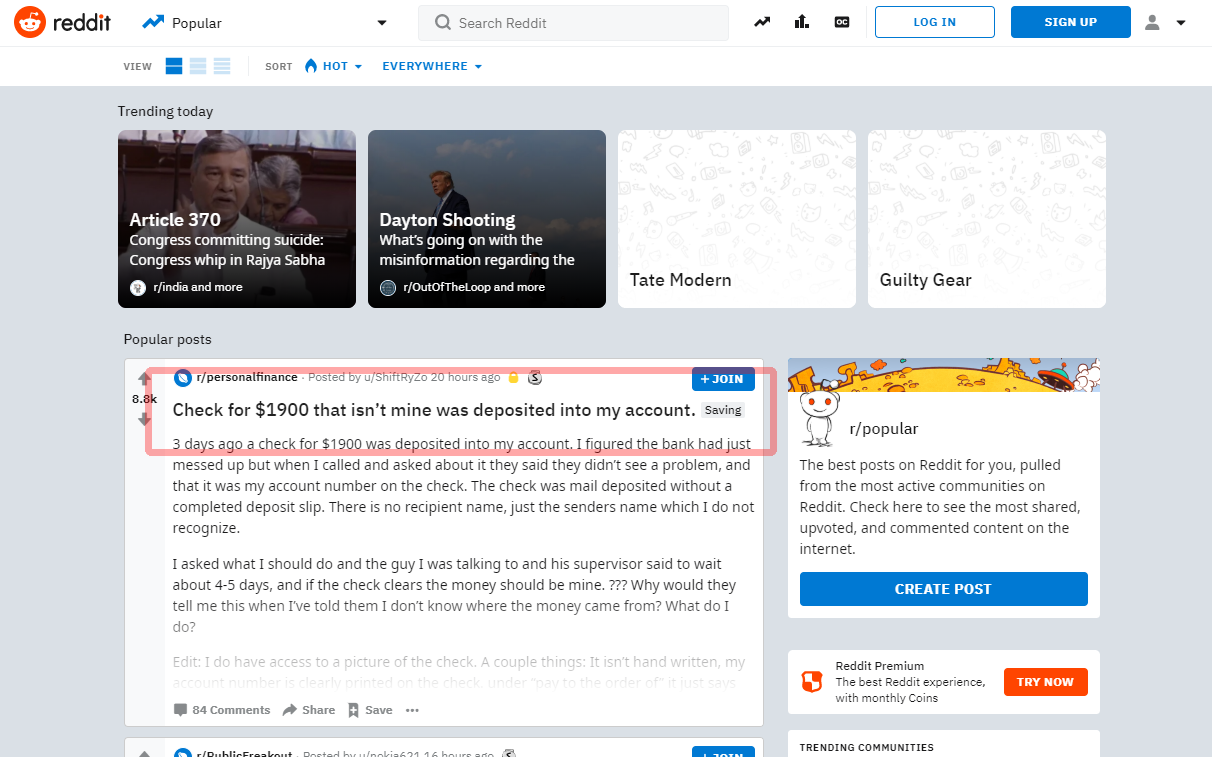

In this tutorial, you will see how to capture the first post on the main page of the well-known social network Reddit.

Before writing the spider's source code, try extracting the title through the shell:

$ sudo scrapy shell "reddit.com"

Within a few seconds, Scrapy will have downloaded the main page. You can then enter commands that use the 'response' object. In the following example, the selector "article h3::text" is used to get the title of the first post:

>>> response.css('article h3::text')[0].get()

If the selector works properly, the title will be displayed. Then, exit the shell:

>>> exit()

To create a new spider, create a new Python file in the project directory, example/spiders/reddit.py:

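import scrapy


class RedditSpider(scrapy.Spider):
    name = "reddit"

    def start_requests(self):
        # Request the main page and pass the response to parseHome.
        yield scrapy.Request(url="https://www.reddit.com/", callback=self.parseHome)

    def parseHome(self, response):
        # Extract the title of the first post.
        headline = response.css('article h3::text')[0].get()

        # Append the title to popular.list (text mode, so a string can be written).
        with open('popular.list', 'a') as popular_file:
            popular_file.write(headline + "\n")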

All spiders inherit from the Spider class of the Scrapy module and issue their initial requests from the start_requests method:

yield scrapy.Request(url="https://www.reddit.com/", callback=self.parseHome)

When Scrapy has completed loading the page, it will call the callback function (self.parseHome).

With the response object available, you can extract the content you are interested in:

headline = response.css('article h3::text')[0].get()

Finally, for demonstration purposes, the title is appended to the "popular.list" file.

Start the newly created spider using the command:

$ sudo scrapy crawl reddit

Once the crawl has completed, the most recently extracted title will be on the last line of the popular.list file.

Scheduling Scrapyd

To schedule the execution of your spiders, you can either use the hosted "Scrapy Cloud" service (see https://scrapinghub.com/scrapy-cloud ) or install the open source daemon, Scrapyd, directly on your server.

Make sure you are in the previously created virtual environment and install the scrapyd package through pip:

$ cd /var/scrapy/

$ source /var/scrapy/bin/activate

$ sudo pip install scrapyd

Once the installation is completed, prepare a service by creating the /etc/systemd/system/scrapyd.service file with the following content:

[Unit]

Description=Scrapy Daemon

[Service]

ExecStart=/var/scrapy/bin/scrapyd

Save the newly created file and start the service via:

$ sudo systemctl start scrapyd

Now, the daemon is configured and ready to accept new spiders.

To deploy your example spider, you need a tool called 'scrapyd-client'. Install it via pip:

$ sudo pip install scrapyd-client

Continue by editing the scrapy.cfg file and setting the deployment data:

[settings]

default = example.settings

[deploy]

url = http://localhost:6800/

project = example
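
Because scrapy.cfg uses the INI format, its deployment settings can be double-checked with Python's standard configparser module before deploying. This is only a sketch: the SCRAPY_CFG string mirrors the file shown above, and read_deploy_target is a hypothetical helper name, not part of Scrapy or scrapyd-client.

```python
import configparser

# Same content as the scrapy.cfg shown above, inlined to keep the example self-contained.
SCRAPY_CFG = """\
[settings]
default = example.settings

[deploy]
url = http://localhost:6800/
project = example
"""


def read_deploy_target(cfg_text):
    """Return the (url, project) pair from the [deploy] section."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    deploy = parser["deploy"]
    return deploy["url"], deploy["project"]
```

To check the real file instead of the inlined string, parser.read("scrapy.cfg") can be used in place of read_string.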

Now, deploy by running the following command from the project directory:

$ scrapyd-deploy

To schedule the spider execution, just call the scrapyd API:

$ sudo curl http://localhost:6800/schedule.json -d project=example -d spider=reddit
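
The same API call can be made from Python, which is convenient if the scheduling is triggered by another script. The sketch below uses only the standard library and assumes the daemon listens on http://localhost:6800/ as configured above. The function names build_schedule_request and schedule_spider are hypothetical helpers introduced for illustration.

```python
import json
from urllib.parse import urlencode, urljoin
from urllib.request import Request, urlopen

# Assumed address of the local Scrapyd daemon, as set in scrapy.cfg.
SCRAPYD_URL = "http://localhost:6800/"


def build_schedule_request(project, spider, base_url=SCRAPYD_URL):
    """Build the POST request equivalent to the curl command above."""
    body = urlencode({"project": project, "spider": spider}).encode("ascii")
    return Request(urljoin(base_url, "schedule.json"), data=body, method="POST")


def schedule_spider(project, spider, base_url=SCRAPYD_URL):
    """Send the request to Scrapyd and return its decoded JSON reply."""
    request = build_schedule_request(project, spider, base_url)
    with urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the daemon running, schedule_spider("example", "reddit") should return the parsed JSON reply from Scrapyd. Building the request separately makes it possible to inspect the URL and body without contacting the server.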