Introduction

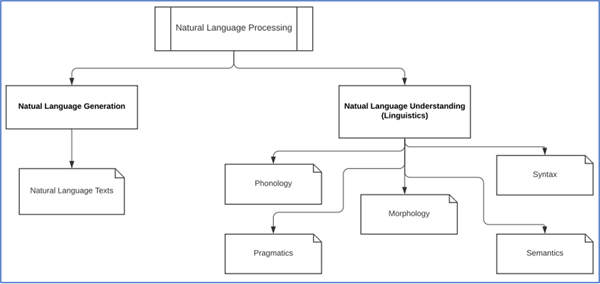

Natural Language Processing (NLP) is a branch of Artificial Intelligence (AI) concerned with analyzing human languages, such as English, and building intelligent systems that can work with them. Language processing is needed whenever a system must act on input that a user provides as text or speech in everyday English. NLP has two main components:

Natural Language Understanding (NLU): The understanding phase maps the natural-language input to a useful internal representation and analyzes different aspects of that input.

Natural Language Generation (NLG): The generation phase produces natural-language output from the representation built in the first phase. Generation starts with Text Planning, the retrieval of relevant content from the knowledge base. Next comes Sentence Planning, where the words that will form the sentence are chosen. It ends with Text Realization, the final construction of the sentence structure.
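To make the three NLG stages concrete, here is a toy, template-based sketch. The knowledge base, its keys and the sentence templates are all hypothetical; real NLG systems are far more elaborate, but the division into text planning, sentence planning and realization is the same.

```python
# Toy sketch of the three NLG stages. The knowledge base, its keys,
# and the phrase templates below are hypothetical examples.
KNOWLEDGE_BASE = {
    "city": "Paris",
    "temperature_c": 21,
    "condition": "sunny",
    "humidity_pct": 40,
}


def plan_text(kb, wanted):
    """Text planning: select only the facts relevant to the message."""
    return {key: kb[key] for key in wanted if key in kb}


def plan_sentence(facts):
    """Sentence planning: choose the words that express each selected fact."""
    phrases = []
    if "condition" in facts:
        phrases.append(f"it is {facts['condition']}")
    if "temperature_c" in facts:
        phrases.append(f"the temperature is {facts['temperature_c']} degrees Celsius")
    return phrases


def realize(city, phrases):
    """Text realization: assemble the final sentence structure."""
    return f"In {city}, {' and '.join(phrases)}."


facts = plan_text(KNOWLEDGE_BASE, ["city", "condition", "temperature_c"])
sentence = realize(facts["city"], plan_sentence(facts))
print(sentence)  # In Paris, it is sunny and the temperature is 21 degrees Celsius.
```

Note that the humidity fact is dropped during text planning because it was not requested.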

Challenges in Natural Language Processing

- Lexical Ambiguity: This is the lowest level of ambiguity and occurs in individual words. For instance, when a program is given a word like ‘board’, it cannot tell whether to treat it as a noun or a verb. This creates ambiguity when processing the input.

- Syntax Level Ambiguity: This type of ambiguity arises when a sentence can be parsed, and therefore interpreted, in more than one way. For instance, take the sentence ‘He raised the scuttle with a blue cap’. This could mean one of two things: either he raised a scuttle with the help of a blue cap, or he raised a scuttle that had a blue cap.

- Referential Ambiguity: Referential ambiguity arises when a pronoun could refer to more than one entity. For instance, two girls are running on a track. Suddenly, she says, ‘I am exhausted’. The program cannot determine which of the two girls is tired.
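Syntactic ambiguity can be demonstrated with NLTK's context-free grammar tools, which need no downloaded data. The grammar below is a hypothetical toy written only to cover the example sentence; a chart parser then returns every parse tree the grammar allows, and each tree corresponds to one reading.

```python
import nltk

# Hypothetical toy grammar, just large enough for the example sentence.
# "VP -> VP PP" attaches the prepositional phrase to the verb (raised it *with* a cap);
# "NP -> NP PP" attaches it to the noun (the scuttle *that had* a cap).
grammar = nltk.CFG.fromstring("""
S -> NP VP
VP -> V NP | VP PP
NP -> Pro | Det N | Det Adj N | NP PP
PP -> P NP
Pro -> 'he'
V -> 'raised'
Det -> 'the' | 'a'
Adj -> 'blue'
N -> 'scuttle' | 'cap'
P -> 'with'
""")

parser = nltk.ChartParser(grammar)
tokens = "he raised the scuttle with a blue cap".split()

# Each distinct parse tree is one possible interpretation of the sentence
trees = list(parser.parse(tokens))
print(f"{len(trees)} readings found")
for tree in trees:
    print(tree)
```

The parser finds two trees, one for each meaning described in the syntax-level ambiguity bullet.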

Terminology and the Science behind Human Cognition

Natural language processing is a rapidly growing AI technique, and a few terms are closely associated with it. Let us look at these terms to understand NLP better.

- Phonology: The systematic organization of sounds is called phonology.

- Morphology: The study of how words are constructed from primitive, meaningful units.

- Morpheme: The smallest meaningful unit that morphology works with. For instance, ‘unhappiness’ is built from the morphemes ‘un’, ‘happy’ and ‘ness’.

- Semantics: As in programming languages, semantics deals with meaning: how the chosen words combine into a meaningful sentence.

- Syntax: The arrangement of words so that they form a sentence. Syntax also determines the role each word plays in the sentence’s structure.

- World Knowledge: NLP algorithms need extensive general knowledge about the world to build meaningful sentences.

- Pragmatics: Different situations may call for differently tuned sentences. Pragmatics deals with how sentences are formed and interpreted in a particular context.

- Discourse: Deals with sequences of sentences, specifically how one sentence affects the formation and interpretation of the next.

Building a Natural Language Processor involves five execution steps:

- Lexical Analysis: Processing of natural language by the NLP algorithm starts with identifying and analyzing the structure of the input words. A lexicon is the collection of words and phrases used in a language. This step divides the whole chunk of text into paragraphs, sentences and words.

- Syntactic Analysis / Parsing: Once the structure of the sentences is formed, syntactic analysis checks the grammar of the sentences and phrases. It arranges the words to show the relationships among them and rejects sentences that are not well formed. For instance, the English language analyzer rejects the sentence ‘An umbrella opens a man’.

- Semantic Analysis: The input text is now checked for meaning: the analyzer draws the exact dictionary meaning of the words in each sentence and then checks every word and phrase for meaningfulness. This is done by mapping syntactic structures to objects in the task domain. For example, a phrase like ‘hot ice’ is rejected.

- Discourse Integration: This step builds the story that the sentences tell. The meaning of a sentence depends on the sentences before it and influences the meaning of the sentences after it; discourse integration checks these relationships.

- Pragmatic Analysis: Once the grammatical and semantic checks are complete, every sentence is evaluated once more, this time for its applicability in the real world, using general knowledge.
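The first two steps can be sketched with NLTK's grammar tools: splitting the sentence into words stands in for lexical analysis, and trying to parse the words stands in for syntactic analysis. The grammar and the `is_grammatical` helper are hypothetical toys; they only judge word order and know nothing about meaning, which is the job of the later steps.

```python
import nltk

# Hypothetical toy grammar: a sentence is a noun phrase followed by a verb phrase.
grammar = nltk.CFG.fromstring("""
S -> NP VP
NP -> Det N
VP -> V NP
Det -> 'the' | 'a' | 'an'
N -> 'man' | 'umbrella'
V -> 'opens' | 'holds'
""")
parser = nltk.ChartParser(grammar)


def is_grammatical(sentence: str) -> bool:
    """Lexical step (split into words), then syntactic step (try to parse)."""
    tokens = sentence.lower().split()
    try:
        # The sentence is accepted if the grammar yields at least one parse tree
        return any(True for _ in parser.parse(tokens))
    except ValueError:
        # NLTK raises ValueError when a word is not covered by the grammar
        return False


print(is_grammatical("The man opens an umbrella"))   # True
print(is_grammatical("man the opens umbrella an"))   # False: invalid word order
```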

Getting Started with NLTK

For language to work fluently, the most important requirement is context. Even humans cannot follow a conversation unless they know what is being talked about. To help build this context in machines, we use the Natural Language Toolkit (NLTK) in Python, a library of tools and datasets for processing human language. Let us walk through a few basics of NLTK.

# Installing the NLTK package

pip install nltk

>>> Note: you may need to restart the kernel to use updated packages.

import nltk

# Gensim is a Python package for topic modelling and semantic modelling of text.

pip install gensim

# Pattern is a web-mining and NLP package; older versions of gensim used it for lemmatization.

pip install pattern

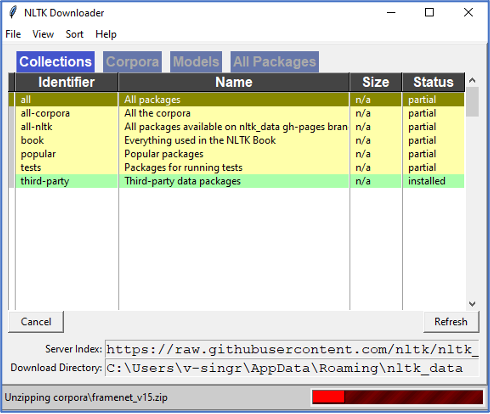

# NLTK comes with a large amount of prebuilt data (corpora, models and word lists) that helps in processing human languages. It is advisable to download this data before working with NLTK; it is stored locally, so this only needs to be done once.

nltk.download()

>>> showing info https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml

>>> True

>>>

# The downloader window lets you choose what to download; a single resource can also be fetched by name, e.g. nltk.download('stopwords'). Once the download is complete, the call returns True in the notebook.

We now move towards three defining concepts of NLP: Tokenization, Stemming and Lemmatization.

Tokenization, Stemming and Lemmatization

Tokenization

In order to read and understand the sequence of words within a sentence, tokenization breaks the sequence into smaller units called tokens. These tokens can be words, numbers or punctuation marks. Tokenization is also termed word segmentation. Here is an example of how tokenization works:

- Input: Cricket, Baseball and Hockey are primarily hand-based sports.

- Tokenized Output: “Cricket”, “,”, “Baseball”, “and”, “Hockey”, “are”, “primarily”, “hand-based”, “sports”, “.”

The points where one word ends and the next begins are called word boundaries, and tokenization is the process of identifying the word boundaries in the given sentences.

- sent_tokenize function: Performs sentence tokenization, splitting the input text into sentences. It can be imported in a Jupyter Notebook with: `from nltk.tokenize import sent_tokenize`

- word_tokenize function: Similar to sentence tokenization, this function divides the input text into words. It can be imported with: `from nltk.tokenize import word_tokenize`

- wordpunct_tokenize function (backed by the WordPunctTokenizer class): In addition to splitting text into words, it treats punctuation marks as separate tokens. It can be imported with: `from nltk.tokenize import wordpunct_tokenize`
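`sent_tokenize` and `word_tokenize` rely on the pre-trained Punkt model, which must be downloaded first (`nltk.download('punkt')`; recent NLTK releases may ask for `'punkt_tab'` instead). To stay runnable without any downloads, this sketch compares `wordpunct_tokenize` with `TreebankWordTokenizer`, whose rules are closely related to the word-level rules `word_tokenize` applies.

```python
from nltk.tokenize import TreebankWordTokenizer, wordpunct_tokenize

text = "Cricket, Baseball and Hockey are primarily hand-based sports."

# Treebank-style rules: punctuation is split off, but hyphenated words stay whole
treebank_tokens = TreebankWordTokenizer().tokenize(text)

# wordpunct_tokenize splits on the pattern \w+|[^\w\s]+, so the hyphen becomes its own token
wordpunct_tokens = wordpunct_tokenize(text)

print(treebank_tokens)
print(wordpunct_tokens)
```

The only difference between the two outputs is how ‘hand-based’ is handled, which illustrates why the choice of tokenizer matters for downstream steps.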

Stemming

When studying the natural languages humans use in conversation, we find that words vary for grammatical reasons. For instance, words like virtual, virtuality and virtualization share the same core meaning but play different roles in different sentences. For NLP algorithms to work correctly, they need to recognize these variations. Stemming is a heuristic process that cuts words down to their root form (the stem), which helps in analyzing their meanings.

- PorterStemmer class: Part of NLTK, it uses Porter’s algorithm to compute stems. For example, the input word ‘running’ is stemmed to ‘run’. It can be imported with: `from nltk.stem.porter import PorterStemmer`

- LancasterStemmer class: Works like Porter’s algorithm but is more aggressive: it strips suffixes more heavily and often produces stems that are not real words. For instance, the word ‘writing’ returns ‘writ’ after running through the Lancaster algorithm. It can be imported with: `from nltk.stem.lancaster import LancasterStemmer`

- SnowballStemmer class: Works in the same way as the other two and supports several languages, so it is created with a language name, e.g. `SnowballStemmer("english")`. It can be imported with: `from nltk.stem.snowball import SnowballStemmer`. These stemmers can be used interchangeably, although they differ in how aggressively they strip words.
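A quick way to see how the three stemmers differ is to run them side by side. None of them needs downloaded data. The word list is an arbitrary example; only the ‘running’ to ‘run’ result is taken from the text above, so the other outputs are left for you to inspect.

```python
from nltk.stem.lancaster import LancasterStemmer
from nltk.stem.porter import PorterStemmer
from nltk.stem.snowball import SnowballStemmer

porter = PorterStemmer()
lancaster = LancasterStemmer()
snowball = SnowballStemmer("english")  # Snowball needs a language name

words = ["running", "writing", "virtualization", "studies"]

# One row per word: the stem produced by each algorithm
for word in words:
    print(
        f"{word:16} porter={porter.stem(word):12} "
        f"lancaster={lancaster.stem(word):12} snowball={snowball.stem(word)}"
    )
```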

Lemmatization

Lemmatization reduces a word to its base form using both the vocabulary and a morphological analysis of the word. Its aim is to remove only inflectional endings and return the dictionary form of the word, which is called a lemma.

- WordNetLemmatizer class: Uses the WordNet lexical database to find a word’s base form; the result depends on the part of speech the word is used as (noun by default, or verb, adjective or adverb). It can be imported with: `from nltk.stem import WordNetLemmatizer`
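With NLTK, lemmatization is typically `WordNetLemmatizer().lemmatize(word, pos="n")` after `nltk.download('wordnet')`. Because WordNet has to be downloaded, the sketch below uses a tiny hypothetical lexicon in its place, simply to show the two ideas from the paragraph above: the lemma depends on the part of speech, and unlike a stem it is a real dictionary word.

```python
from nltk.stem.porter import PorterStemmer

# Hypothetical mini-lexicon standing in for WordNet: (surface form, POS) -> lemma.
# POS codes follow WordNetLemmatizer's convention: "n" noun, "v" verb, "a" adjective.
LEXICON = {
    ("studies", "n"): "study",
    ("studies", "v"): "study",
    ("meeting", "n"): "meeting",
    ("meeting", "v"): "meet",
    ("better", "a"): "good",
    ("geese", "n"): "goose",
}


def lemmatize(word: str, pos: str = "n") -> str:
    """Look up the lemma for a word and POS; fall back to the word itself."""
    return LEXICON.get((word.lower(), pos), word.lower())


stemmer = PorterStemmer()
for word, pos in [("studies", "n"), ("meeting", "n"), ("meeting", "v"), ("better", "a")]:
    print(f"{word:8} pos={pos}  stem={stemmer.stem(word):8} lemma={lemmatize(word, pos)}")
```

The stemmer turns ‘studies’ into ‘studi’, which is not a word, while the lemma is ‘study’; and ‘meeting’ keeps its form as a noun but becomes ‘meet’ as a verb.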

Concepts of Data Chunking

Chunking, as the name suggests, is the process of dividing data into chunks, and it is an important step in Natural Language Processing. Its primary function is to group words into short phrases, such as noun phrases, based on their parts of speech. Once the input has been tokenized and each token tagged with its part of speech, chunking groups and labels these tokens so that the algorithm can understand them better. Two methodologies are used for chunking, described below:

- Chunking Up: Chunking upwards means zooming out on the problem. The sentences become more abstract, and the individual words and phrases of the input are generalized. For instance, chunking up the question ‘What is the purpose of a bus?’ gives the answer ‘Transport’.

- Chunking Down: The opposite of chunking up: moving downwards takes us deeper into the language, and objects become more specific. For instance, chunking down the question ‘What is a car?’ yields specific details of the car, such as its color, shape, brand and size.

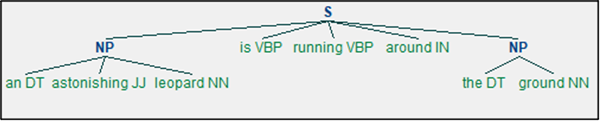

# We will perform noun-phrase (NP) chunking in this example, one category of chunking. Here we predefine the grammar that the program will use to perform the chunking.

import nltk

# The next step is to define the sentence’s structure as (word, part-of-speech tag) pairs.

# DT -> Determiner, JJ -> Adjective, NN -> Noun, VBZ -> Verb (3rd person singular present), VBG -> Verb (gerund/present participle), IN -> Preposition

test_phrases = [("an","DT"), ("astonishing","JJ"), ("leopard","NN"), ("is","VBZ"), ("running","VBG"), ("around", "IN"), ("the","DT"),("ground","NN")]

# Chunking allows us to define the grammar as a regular expression: an NP is an optional determiner, any number of adjectives, then a noun

def_grammar = "NP:{<DT>?<JJ>*<NN>}"

parse_chunk_tests = nltk.RegexpParser(def_grammar)

parse_chunk_tests.parse(test_phrases)

>>> Tree('S', [Tree('NP', [('an', 'DT'), ('astonishing', 'JJ'), ('leopard', 'NN')]), ('is', 'VBZ'), ('running', 'VBG'), ('around', 'IN'), Tree('NP', [('the', 'DT'), ('ground', 'NN')])])

final_chunk_output = parse_chunk_tests.parse(test_phrases)

# draw() opens the chunk tree in a separate window

final_chunk_output.draw()

>>>

# We see that the chunking has distinguished input phrases based on the grammar differentiation provided.
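Drawing the tree is useful for inspection, but a program usually needs the chunks themselves. A minimal sketch, reusing the same grammar and a Penn Treebank-tagged version of the example sentence, walks the parse tree and keeps only the subtrees labelled NP:

```python
import nltk

# Tagged sentence (Penn Treebank tags): DT determiner, JJ adjective, NN noun,
# VBZ/VBG verb forms, IN preposition
tagged = [("an", "DT"), ("astonishing", "JJ"), ("leopard", "NN"), ("is", "VBZ"),
          ("running", "VBG"), ("around", "IN"), ("the", "DT"), ("ground", "NN")]

# NP = optional determiner, any number of adjectives, then a noun
chunker = nltk.RegexpParser("NP: {<DT>?<JJ>*<NN>}")
tree = chunker.parse(tagged)

# Keep only the NP subtrees and join their words back into phrases
noun_phrases = [
    " ".join(word for word, tag in subtree.leaves())
    for subtree in tree.subtrees(filter=lambda t: t.label() == "NP")
]
print(noun_phrases)  # ['an astonishing leopard', 'the ground']
```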

In the last section of this chapter, we will implement a simple NLP program that examines the text of a web page and tells us what the article is about. Identifying what a text is about is the goal of topic modelling, which is used to identify patterns in underlying data.

Topic Modelling and Identifying Patterns in Data

Documents and discussions generally revolve around topics: every conversation has a central topic that the discussion builds on. For NLP to understand and work with human conversations, it needs to derive the topic of discussion from the given input. To do so, algorithms look for patterns in the input, such as groups of words that tend to occur together. This process is called topic modelling, and it is used to uncover the hidden topics, or core, of the documents being processed. Topic modelling is used in the following scenarios:

- Text Classification: It can improve the classification of textual data, since topic modelling groups similar words, nouns and actions together and uses the resulting topics, rather than individual words, as features.

- Recommender Systems: Recommendation systems rely on finding similar content. Topic modelling helps them by providing a basis for computing similarity matrices over the given data.
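The similarity-matrix idea behind recommender systems can be sketched in plain Python. This is a deliberate simplification: it skips the topic-modelling step and compares raw word counts, whereas a real system would typically compare topic vectors (for example, from gensim's `LdaModel`). The item descriptions and helper names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical item descriptions for a recommender
docs = {
    "sedan": "a comfortable sedan car with a diesel engine",
    "suv": "a large suv car with a petrol engine",
    "recipe": "a quick pasta recipe with tomato sauce",
}


def bag_of_words(text):
    """Lowercase the text and count each word."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


vectors = {name: bag_of_words(text) for name, text in docs.items()}
names = list(vectors)

# similarity[i][j] compares item names[i] with item names[j]
similarity = [[cosine(vectors[r], vectors[c]) for c in names] for r in names]


def most_similar(name):
    """Recommend the other item whose description is closest to this one."""
    i = names.index(name)
    return max((similarity[i][j], names[j]) for j in range(len(names)) if j != i)[1]


print(most_similar("sedan"))  # suv
```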

# Let us now work out an example using NLP to read through a webpage and classify what the page is about

# Installing the NLTK package

pip install nltk

import nltk

nltk.download()

# Let us take a webpage link and use the urllib module to fetch the contents of this page.

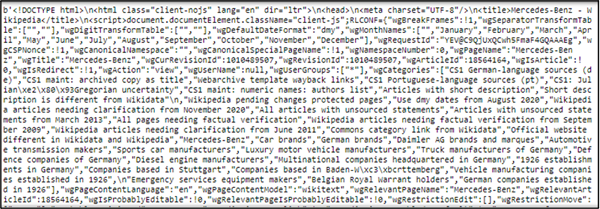

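import urllib.request

# Wikipedia may reject requests that do not send a descriptive User-Agent header, so we set one (the string below is only an example)

test_req = urllib.request.Request('https://en.wikipedia.org/wiki/Mercedes-Benz', headers={'User-Agent': 'nltk-tutorial-example/1.0'})

test_resp = urllib.request.urlopen(test_req)

input_page = test_resp.read()

print(input_page)

>>>

# This prints the raw HTML of the fetched page, as bytes.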

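# We will now use Beautiful Soup (a Python library, installed with pip install beautifulsoup4) to parse the HTML and extract clean text from it. The 'html5lib' parser is a separate package (pip install html5lib).

from bs4 import BeautifulSoup

soup_type = BeautifulSoup(input_page,'html5lib')

# separator=' ' keeps text from neighbouring tags from being glued together into one word

input_text = soup_type.get_text(separator=' ', strip=True)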

print(input_text)

>>>

# The output is now plain text with the HTML markup removed
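The `separator` argument of `get_text()` matters whenever text sits in neighbouring tags. This small offline sketch uses a hard-coded HTML snippet (a hypothetical stand-in for the real page) and Python's built-in `html.parser`, so neither network access nor html5lib is needed:

```python
from bs4 import BeautifulSoup

# A tiny hard-coded page so the example runs without network access
html = "<html><body><h1>Mercedes-Benz</h1><p>German automotive brand</p></body></html>"
soup = BeautifulSoup(html, "html.parser")  # built-in parser, no extra install

joined = soup.get_text(strip=True)                    # stripped pieces glued together
separated = soup.get_text(separator=" ", strip=True)  # stripped pieces joined by spaces

print(joined)     # Mercedes-BenzGerman automotive brand
print(separated)  # Mercedes-Benz German automotive brand
```

Without a separator, the heading and the paragraph merge into the single token ‘Mercedes-BenzGerman’, which would distort the word counts computed later.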

# These are now clean data chunks. The next step is to convert these chunks of text into tokens that the NLP algorithm can use.

build_tokens = input_text.split()

print(build_tokens)

>>>

# This output consists of tokenized words

# We now count the word frequency of the contents

# The FreqDist class in NLTK works well for this purpose. We first preprocess the data by removing stop words such as "at", "the", "a" and "for", which add no meaning to the result.

from nltk.corpus import stopwords

# The stopwords corpus must be downloaded once: nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

# Keep only tokens that are not stop words (compared in lowercase, since the list is lowercase)

clean_text = [token for token in build_tokens if token.lower() not in stop_words]

freq_dist = nltk.FreqDist(clean_text)

for k, v in freq_dist.items():
    print(str(k) + ':' + str(v))

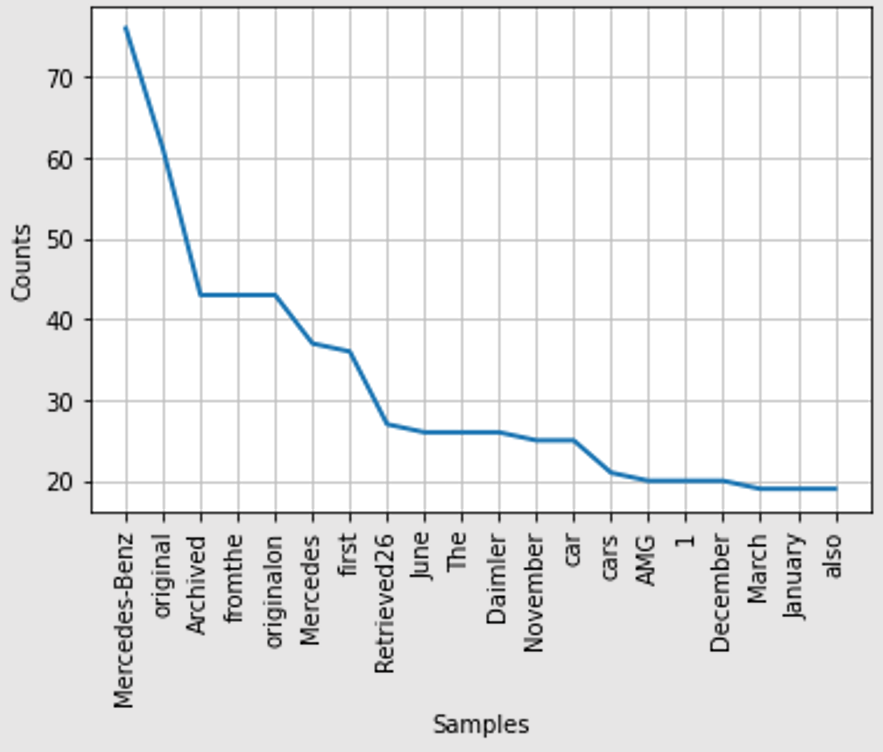

# Lastly, we plot this output into a graph that will visually tell us what topic is most talked about throughout this web page

freq_dist.plot(20, cumulative=False)

>>>

# The output clearly tells us that “Mercedes-Benz” is the key topic of discussion throughout this webpage
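The whole pipeline can also be tried offline on a short text. In this sketch the sample text stands in for the scraped page and the small stop-word set is a hypothetical replacement for `nltk.corpus.stopwords`; `FreqDist.most_common()` then lists the most frequent content words, which is the same information the plot shows.

```python
import string

import nltk

# Hard-coded sample text standing in for the scraped page
text = ("Mercedes-Benz is a German brand. Mercedes-Benz builds cars, trucks and buses. "
        "The cars made by Mercedes-Benz are sold worldwide.")

# Hypothetical mini stop-word list; the chapter uses nltk.corpus.stopwords instead
stop_words = {"a", "and", "are", "by", "is", "the"}

# Whitespace tokens with leading/trailing punctuation stripped ("Mercedes-Benz" stays whole)
tokens = [t.strip(string.punctuation) for t in text.split()]
content_words = [t for t in tokens if t and t.lower() not in stop_words]

freq_dist = nltk.FreqDist(content_words)
print(freq_dist.most_common(2))  # [('Mercedes-Benz', 3), ('cars', 2)]
```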

We have done a brief analysis of natural languages in this chapter and will dive deeper into more complex concepts in the coming chapters. This categorization example helped us predict what a webpage was about, and there are many similar use cases where NLP is helpful.

Coming up Next

With Natural Language Processing, we have now moved deeper into Artificial Intelligence. NLP is a vast topic and is important for mimicking human intelligence, since language plays a crucial role in our thought processes. A wide variety of use cases depend on processing natural languages, and its applications span many industries and domains.

In the coming chapters we will be going deeper into the practical uses of Natural Language Processing in today’s market and its influence on Artificial Intelligence.

- Working the Bag of Words (BOW) Problem

- Recognition of Speech

- Analysis of Sentiments through Language
