Visual Understanding of the World

Computer Vision is the branch of Artificial Intelligence that deals with visual data. With the growing use of machine learning and deep learning models, computer systems today can work with digital images and videos to understand their contents and identify characteristics such as the emotions they show. Computer Vision as a computational concept first appeared in the 1950s, when early neural networks were used to detect the edges of objects (similar to what we saw in Chapter 11, Edge Detection); the same ideas were later extended to handwritten text, speech, and languages. There are many varied use cases that justify the use of computer vision in today’s industry. A basic example is an examination conducted online, where a web camera can read the expressions of the candidate to interpret their state of mind. The same approach is useful for testing the emotional strength of pilots and race car drivers before they move to the cockpit for the final drive. Many systems today, including voice assistants like Alexa and Siri, attempt to mimic human behavior and respond with a suitable sentiment. This also ties into cognitive therapy, which deals with stress and anxiety disorders among war veterans and equity traders, who are constantly under emotional strain.

Cognitive Science and Sentiment Analysis

Artificial Intelligence today has reached heights that were not imagined a few years back. Programs and computer systems can now mimic human behavior, reactions, and responses with a high level of accuracy. In this chapter we will try to understand Computer Vision, a branch of AI that is focused on the interpretation and analysis of image-based and video-based data. Before we get into its implementation, let us learn a little about Cognitive Science and the analysis of sentiments.

Sentiment Analysis

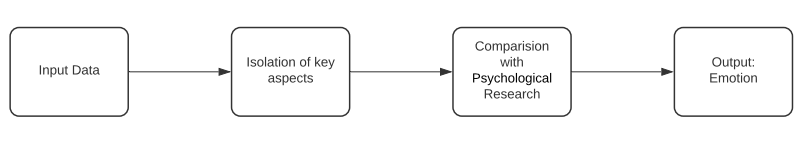

The analysis of human sentiments, also referred to as opinion mining or, in some contexts, Emotion AI, is the study of the different emotional states a person expresses. The techniques that make sentiment analysis possible are natural language processing, computational linguistics, text mining, and the analysis of biometrics. We have already seen the implementation of Natural Language Processing (NLP) and Speech Processing in this domain; in this chapter, we will concentrate on the biometric side, specifically facial expressions. The basic task of any sentiment analysis program is to isolate the polarity of the input (text, speech, facial expression, etc.), that is, to decide whether the primary sentiment presented is positive, negative, or neutral. Based on this initial analysis, programs then often dig deeper to identify emotions like enjoyment, happiness, disgust, anger, fear, and surprise. There are two prerequisites for this analysis. The first is quantifying the input data so that algorithms can read and process it; the second is the psychological research that identifies which expression stands for which emotion.
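One way to relate the two levels mentioned here, polarity and individual emotions, is to group per-emotion scores into polarity classes. The sketch below is only an illustration: the grouping of labels into positive, negative, and neutral, the `polarity` helper, and the sample scores are all assumptions made for this example, not something prescribed by a particular library.

```python
# Hypothetical grouping of emotion labels into polarity classes.
# This mapping is an assumption for illustration only.
POSITIVE = {"happy"}
NEGATIVE = {"angry", "disgust", "fear", "sad"}
# Every other label (for example "surprise" and "neutral") counts as neutral.


def polarity(emotions):
    """Return 'positive', 'negative' or 'neutral' for a dict of emotion scores."""
    totals = {"positive": 0.0, "negative": 0.0, "neutral": 0.0}
    for label, score in emotions.items():
        if label in POSITIVE:
            totals["positive"] += score
        elif label in NEGATIVE:
            totals["negative"] += score
        else:
            totals["neutral"] += score
    # Pick the polarity class with the largest combined score
    return max(totals, key=totals.get)


# Illustrative scores on a 0 to 1 scale
scores = {"angry": 0.84, "disgust": 0.0, "fear": 0.1, "happy": 0.0,
          "sad": 0.02, "surprise": 0.01, "neutral": 0.03}
print(polarity(scores))  # negative
```

Summing the scores per class, rather than looking only at the single highest emotion, lets several weak negative emotions outweigh one moderate neutral score; whether that is desirable depends on the application.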

All algorithms that enable sentiment analysis follow the flow of events described above. Let us now understand how images and visual data aid in the analysis of sentiments by studying Cognitive Science and its applications to the Sentiment Analysis space.

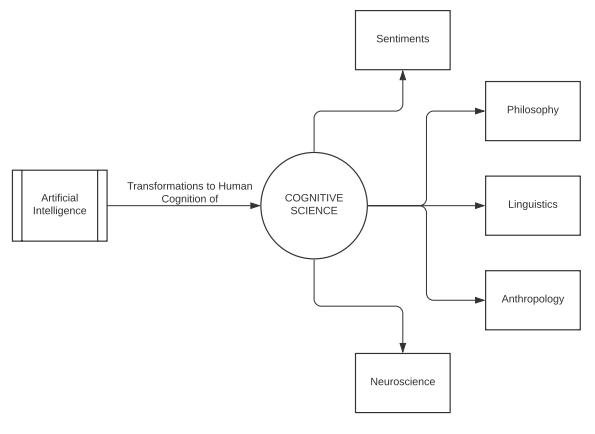

Cognitive Science

In terms of computing systems, cognitive science is the study of scientific processes that occur in a human brain. It is responsible for examining the functions of cognition, namely, perception of thoughts, languages, memory of the brain, reasoning and processing received information. At a broader level, it is the study of intelligence and behavior.

The goal of Cognitive Science is to study the human brain and understand its principles of intelligence. This is done with the hope that, by building computer systems from the knowledge of human intelligence, machines will be able to mimic learning and develop intelligent behavior patterns like humans. Cognitive Science operates at three distinct levels of analysis:

- The computational theory: At this level, the goals of analysis are specified and fed to the computer system. This could be imitation of speech or understanding of emotions.

- Representation and algorithms: In general Machine Learning terms, this is the training stage. Here the ideal input and output scenarios are presented to the machine and algorithms are put in place that will ultimately be responsible for transforming input to output.

- The hardware implementation: This is the final cognition phase. It is the enactment of the algorithm in the real world and analysis of its working trajectory against a human brain.

Let us now move forward with these concepts and learn about the libraries and methods that will help in performing sentiment analysis using facial data.

Emotion Detection using Facial Expressions

It is often said that our innermost feelings are reflected on our face. Facial expressions are a vital mode of communication in humans as well as animals. Human behavior and psychological traits can both be studied using facial expressions, and they are also widely used in medical treatments and therapies. In this section we will work on images of facial expressions and portraits of faces to decode the sentiment presented in the image. In a later section, we will repeat the same steps with a video-based input.

Face Emotion Recognizer

The Face Emotion Recognizer (generally known as FER) is an open-source Python library built and maintained by Justin Shenk and is used for sentiment analysis of images and videos. The project is built on a convolutional neural network whose weights are stored in an HDF5 data file shipped with the source code of the model (the FER source code is publicly available). This default model can be overridden through the FER constructor when the model is created and initialized.

- mtcnn: This is a parameter of the constructor that selects the face detection technique. MTCNN stands for multi-task cascaded convolutional networks. When the parameter is set to True, the MTCNN model is used to detect faces; when it is set to False, the default OpenCV Haar cascade classifier is used.

- detect_emotions(): This function classifies the emotion of each detected face into seven categories, namely, ‘angry’, ‘disgust’, ‘fear’, ‘happy’, ‘sad’, ‘surprise’, and ‘neutral’. Every emotion is scored, and each score lies on a scale of 0 to 1.

Flow of Logic: The program starts by taking as input the image or video that needs analysis. The FER() constructor is initialized with a face detection classifier (either the OpenCV Haar cascade or MTCNN). We then call the detect_emotions() function of the resulting object, passing the input image to it. The result is a list with one entry per detected face, each holding the face’s bounding box and a score for every emotion. Finally, the top_emotion() function isolates the highest-scoring emotion and returns it together with its score. The dependencies for installing FER are OpenCV version 3.2 or greater, TensorFlow version 1.7 or greater, and Python 3.6. Let us now see the implementation of this algorithm for images.

#!/usr/bin/env python

# coding: utf-8

# We install the fer library to perform facial expression recognition

# This installation will also take care of any of the above dependencies if they are missing

pip install fer

>>>

Downloading fer-20.1.3-py3-none-any.whl (810 kB)

Collecting keras

Downloading Keras-2.4.3-py2.py3-none-any.whl (36 kB)

Collecting opencv-contrib-python

Downloading opencv_contrib_python-4.5.1.48-cp38-cp38-win_amd64.whl (41.2 MB)

Requirement already satisfied: matplotlib in d:\users\anaconda\lib\site-packages (from fer) (3.3.2)

Collecting mtcnn>=0.1.0

Downloading mtcnn-0.1.0-py3-none-any.whl (2.3 MB)

Collecting tensorflow~=2.0

Downloading tensorflow-2.4.1-cp38-cp38-win_amd64.whl (370.7 MB)

from fer import FER

import matplotlib.pyplot as plt

%matplotlib inline

# Read the image from the directory

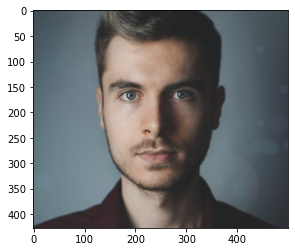

test_image_one = plt.imread("Image-One.jpeg")

# Create the FER() class object with mtcnn

emo_detector = FER(mtcnn=True)

# Capture all the emotions on the image

captured_emotions = emo_detector.detect_emotions(test_image_one)

# Print all captured emotions with the image

print(captured_emotions)

plt.imshow(test_image_one)

>>> [{'box': (94, 47, 293, 293), 'emotions': {'angry': 0.01, 'disgust': 0.0, 'fear': 0.0, 'happy': 0.01, 'sad': 0.01, 'surprise': 0.0, 'neutral': 0.97}}]

>>> <matplotlib.image.AxesImage at 0x13c1c5704c0>
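Each entry in the printed result carries a 'box' alongside the emotion scores. The sketch below shows how such a box could be used to crop the face out of the image array. It assumes the four numbers are (x, y, width, height) in pixels, measured from the top-left corner of the image; that layout is an assumption here, `crop_face` is a hypothetical helper rather than part of FER, and a blank NumPy array stands in for the real photo.

```python
import numpy as np


def crop_face(image, box):
    """Crop the face region from an image array.

    Assumes box is (x, y, width, height) in pixels, with (x, y) the
    top-left corner of the face.
    """
    x, y, w, h = box
    # Compute the far corner first, then clamp the near corner so that a
    # slightly negative coordinate does not wrap around in NumPy indexing.
    x2, y2 = x + w, y + h
    x1, y1 = max(x, 0), max(y, 0)
    return image[y1:y2, x1:x2]


# Blank stand-in image: 400 rows (height) by 500 columns (width), 3 channels
image = np.zeros((400, 500, 3), dtype=np.uint8)
face = crop_face(image, (94, 47, 293, 293))
print(face.shape)  # (293, 293, 3)
```

Note that image arrays are indexed as rows first (y), then columns (x), which is why the slice order is the reverse of the box order.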

# Use the top_emotion() function to get the dominant emotion in the image

dominant_emotion, emotion_score = emo_detector.top_emotion(test_image_one)

print(dominant_emotion, emotion_score)

>>> neutral 0.97
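The dominant emotion can also be recovered by hand from the structure that detect_emotions() printed for the first image. The following is a sketch of the idea only, not FER's actual implementation; `rank_emotions` is a hypothetical helper, and the data is copied from the printed result for the first test image.

```python
def rank_emotions(face_result):
    """Return (label, score) pairs sorted from highest to lowest score."""
    return sorted(face_result["emotions"].items(),
                  key=lambda item: item[1], reverse=True)


# Result copied from the detect_emotions() output for the first test image
captured = [{'box': (94, 47, 293, 293),
             'emotions': {'angry': 0.01, 'disgust': 0.0, 'fear': 0.0,
                          'happy': 0.01, 'sad': 0.01, 'surprise': 0.0,
                          'neutral': 0.97}}]

# Rank the emotions of the first (and here only) detected face
ranking = rank_emotions(captured[0])
top_label, top_score = ranking[0]
print(top_label, top_score)  # neutral 0.97
```

Keeping the full ranking, rather than only the top entry, makes it easy to see when the second-placed emotion is close to the first.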

# We repeat the same steps for a few other images to confirm the performance of FER()

test_image_two = plt.imread("Image-Two.jpg")

captured_emotions_two = emo_detector.detect_emotions(test_image_two)

print(captured_emotions_two)

plt.imshow(test_image_two)

dominant_emotion_two, emotion_score_two = emo_detector.top_emotion(test_image_two)

print(dominant_emotion_two, emotion_score_two)

>>> [{'box': (30, 48, 110, 110), 'emotions': {'angry': 0.0, 'disgust': 0.0, 'fear': 0.0, 'happy': 0.98, 'sad': 0.0, 'surprise': 0.0, 'neutral': 0.01}}]

>>> happy 0.98

# Testing on another image

test_image_three = plt.imread("Image-Three.jpg")

captured_emotions_three = emo_detector.detect_emotions(test_image_three)

print(captured_emotions_three)

plt.imshow(test_image_three)

dominant_emotion_three, emotion_score_three = emo_detector.top_emotion(test_image_three)

print(dominant_emotion_three, emotion_score_three)

>>> [{'box': (155, 193, 675, 675), 'emotions': {'angry': 0.01, 'disgust': 0.0, 'fear': 0.0, 'happy': 0.46, 'sad': 0.02, 'surprise': 0.0, 'neutral': 0.51}}]

>>> neutral 0.51

# Testing on another image

test_image_four = plt.imread("Image-Four.jpg")

captured_emotions_four = emo_detector.detect_emotions(test_image_four)

print(captured_emotions_four)

plt.imshow(test_image_four)

dominant_emotion_four, emotion_score_four = emo_detector.top_emotion(test_image_four)

print(dominant_emotion_four, emotion_score_four)

>>> [{'box': (150, 75, 236, 236), 'emotions': {'angry': 0.84, 'disgust': 0.0, 'fear': 0.1, 'happy': 0.0, 'sad': 0.02, 'surprise': 0.01, 'neutral': 0.03}}]

>>> angry 0.84

We have now observed how images can be analyzed to retrieve the expressions and emotional states of people present in those images. In the next part, we will perform the same analysis using videos.

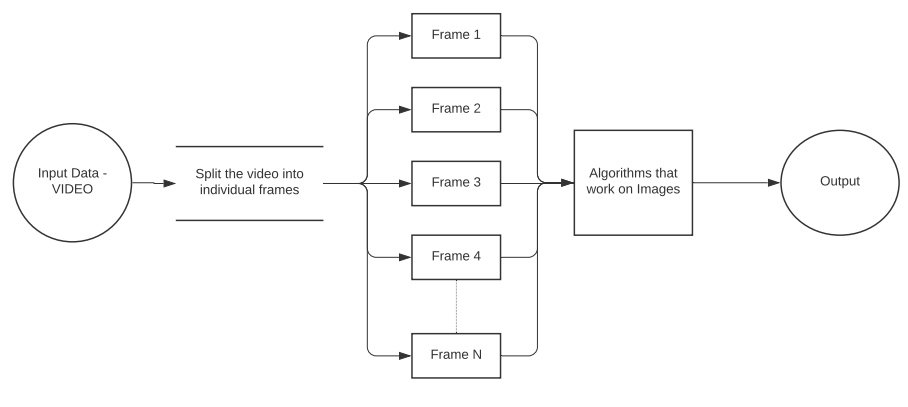

Working on Video for Emotion Detection

Similar to our processing of images for sentiment extraction, in this section we will work on videos. Theoretically, a video is a sequence of continuous image frames played in motion. So essentially, an algorithm works the same way on videos as on images. The only additional step in processing videos is splitting the video into its individual frames and then applying the image processing algorithm to each frame.
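The frame-by-frame idea can be sketched generically before turning to FER's own video support. In the sketch below, `analyze_frames` and `iter_video_frames` are hypothetical helper names introduced for illustration; the frame reader relies on OpenCV's `cv2.VideoCapture`, and stand-in frames and a stand-in detector are used so that the example runs without a video file or a trained model.

```python
def analyze_frames(frames, detect):
    """Apply an image-level detector to each frame and collect the results."""
    results = []
    for index, frame in enumerate(frames):
        results.append({"frame": index, "faces": detect(frame)})
    return results


def iter_video_frames(path):
    """Yield the frames of a video file one by one using OpenCV."""
    import cv2  # imported lazily so the rest of the sketch runs without OpenCV

    capture = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # no more frames, or the file could not be read
                break
            yield frame
    finally:
        capture.release()


def fake_detect(frame):
    """Stand-in detector that reports one fully 'happy' face for any frame."""
    return [{"emotions": {"happy": 1.0}}]


# Stand-in frames; a real run would pass iter_video_frames(<path to a video>)
fake_frames = ["frame-a", "frame-b", "frame-c"]
results = analyze_frames(fake_frames, fake_detect)
print(len(results))  # 3
```

With a real video, the same function could be called with `iter_video_frames(...)` as the frame source and an image-level detector function as `detect`, which mirrors the "split, then analyze each frame" step described above.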

Flow of Logic: Although the underlying algorithm is similar for both images and videos, there are a few key changes that we will follow for videos.

- Video.analyze(): This function is responsible for extracting the individual image frames from a video and then analyzing them independently.

- Each frame analyzed by this function is stored as a separate image by the algorithm in the root directory folder where the code is running. Also, this function later creates a replica of the original video by placing a box around the face and showing live emotions within the video.

- We then create a Pandas DataFrame from these analyzed values and plot this dataframe using matplotlib. In this plot we can see every emotion plotted against time.

- We can further analyze this dataframe by taking individual emotion values that were recognized by the model and finding which sentiment was dominant across the entire video.

This way, we are able to work on videos by extracting individual image frames and analyzing them. This process is displayed in the diagram below that shows how an additional step gets added for processing videos. We will be seeing this implementation in the section below.

# Working on the same concept with videos as input

# Import the necessary FER() packages for Video Processing

from fer import Video

from fer import FER

import os

import sys

import pandas as pd

# Put in the location of the video file that has to be processed

location_videofile = "C:/Users/v-singr/Downloads/Chapter 12/Video_One.mp4"

# Create the face detector

face_detector = FER(mtcnn=True)

# Input the video for processing

input_video = Video(location_videofile)

# The analyze() function will run analysis on every frame of the input video.

# It will draw a rectangular box around every detected face and show the emotion values next to it.

# Finally, the method will publish a new video that has a box around the person's face with live emotion values.

processing_data = input_video.analyze(face_detector, display=False)

>>>

Starting to Zip

Compressing: 8%

Compressing: 17%

Compressing: 25%

Compressing: 34%

Compressing: 42%

Compressing: 51%

Compressing: 60%

Compressing: 68%

Compressing: 77%

Compressing: 85%

Compressing: 94%

Zip has finished

# We will now convert the analysed information into a dataframe.

# This will help us export the data as a .CSV file to perform analysis over it later

vid_df = input_video.to_pandas(processing_data)

vid_df = input_video.get_first_face(vid_df)

vid_df = input_video.get_emotions(vid_df)

# Plotting the emotions against time in the video

pltfig = vid_df.plot(figsize=(20, 8), fontsize=16).get_figure()

>>>

# We will now work on the dataframe to extract which emotion was prominent in the video

angry = sum(vid_df.angry)

disgust = sum(vid_df.disgust)

fear = sum(vid_df.fear)

happy = sum(vid_df.happy)

sad = sum(vid_df.sad)

surprise = sum(vid_df.surprise)

neutral = sum(vid_df.neutral)

emotions = ['Angry', 'Disgust', 'Fear', 'Happy', 'Sad', 'Surprise', 'Neutral']

emotions_values = [angry, disgust, fear, happy, sad, surprise, neutral]

score_comparisons = pd.DataFrame(emotions, columns = ['Human Emotions'])

score_comparisons['Emotion Value from the Video'] = emotions_values

score_comparisons

>>>

Human Emotions Emotion Value from the Video

0 Angry 76.51

1 Disgust 0.02

2 Fear 154.88

3 Happy 162.85

4 Sad 91.33

5 Surprise 28.19

6 Neutral 68.25
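The seven separate sum() calls can also be expressed as a single column-wise sum in pandas, after which the dominant emotion is simply the column with the largest total. The sketch below uses a small toy DataFrame (`frames_df`, with made-up values and only three emotion columns) in place of vid_df, so the numbers are illustrative and not taken from the videos in this chapter.

```python
import pandas as pd

# Toy per-frame scores standing in for vid_df (illustrative values only)
frames_df = pd.DataFrame({
    "angry":   [0.10, 0.05, 0.00],
    "happy":   [0.60, 0.70, 0.20],
    "neutral": [0.30, 0.25, 0.80],
})

totals = frames_df.sum()        # one total per emotion column
dominant = totals.idxmax()      # label of the column with the largest total
shares = totals / totals.sum()  # each emotion's share of the overall total

print(dominant)  # happy
print(shares.round(2))
```

Dividing by the overall total turns the raw sums into shares, which makes videos of different lengths easier to compare, since a longer video accumulates larger sums for every emotion.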

# We will conclude the project by trying the code on another video

location_videofile_two = "C:/Users/v-singr/Downloads/Chapter 12/Video_Two.mp4"

input_video_two = Video(location_videofile_two)

processing_data_two = input_video_two.analyze(face_detector, display=False)

vid_df_2 = input_video_two.to_pandas(processing_data_two)

vid_df_2 = input_video_two.get_first_face(vid_df_2)

vid_df_2 = input_video_two.get_emotions(vid_df_2)

pltfig = vid_df_2.plot(figsize=(20, 8), fontsize=16).get_figure()

>>>

angry_2 = sum(vid_df_2.angry)

disgust_2 = sum(vid_df_2.disgust)

fear_2 = sum(vid_df_2.fear)

happy_2 = sum(vid_df_2.happy)

sad_2 = sum(vid_df_2.sad)

surprise_2 = sum(vid_df_2.surprise)

neutral_2 = sum(vid_df_2.neutral)

emotions_values_2 = [angry_2, disgust_2, fear_2, happy_2, sad_2, surprise_2, neutral_2]

score_comparisons = pd.DataFrame(emotions, columns = ['Human Emotions'])

score_comparisons['Emotion Value from the Video'] = emotions_values_2

score_comparisons

>>>

Human Emotions Emotion Value from the Video

0 Angry 21.02

1 Disgust 0.00

2 Fear 32.47

3 Happy 137.04

4 Sad 77.69

5 Surprise 13.09

6 Neutral 176.34

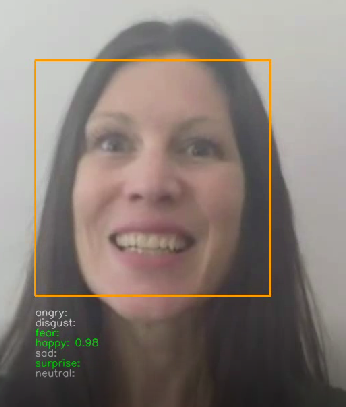

# For instance, this is what one portion of the final analyzed video looks like.

# All these are saved to the folder where the code runs.

With this we conclude the analysis of both images and videos to perform Emotion Recognition. We were able to successfully work with human faces and understand sentiments shown in facial expressions.

Project Summary, Conclusions and Next Up

Sentiment Analysis and Face Detection each have numerous use cases in today’s world. We see object detection algorithms in public parking lots, traffic monitoring systems, and similar settings, where images of people driving vehicles are captured for record keeping. Sentiment Analysis is also used in therapy when the therapist and the patient cannot meet in person. The study of human cognition has also helped advance medicine. On the technological front, virtual assistants, profile evaluation assistants, and automation bots are built to mimic the actions of humans and take over their tasks, with the hope of increasing accuracy and decreasing errors. It is therefore a very important part of the Artificial Intelligence inspired world we live in today. A more involved approach to computer vision uses cloud-based services like Azure Cognitive Services or Deep Learning mechanisms, which we have not covered in this course but which could come in handy for complex scenarios. Through our study of the last two chapters, we have learned the following:

- Cognitive Science is the study of human thought processes and aims at delivering human reactions and emotions to machines through algorithms.

- Computer Vision is the branch of Artificial Intelligence that focuses on implementing Cognitive Science in the real world by working with human data in the form of images.

- Image Processing is a part of all Computer Vision algorithms that helps algorithms understand images, process them, work with them as numeric vectors and perform necessary operations.

As part of this chapter and the one before it, we used the power of Artificial Intelligence to work on Cognitive Science and dealt with human faces. This branch is generally referred to as Computer Vision. We were able to extract emotions from photos and videos of human faces. We are now coming towards the end of our series of tutorials in Python Programming for AI. In the coming chapter, we will implement a full-fledged algorithm to work on Artificial Intelligence and see several aspects of AI and ML in action. Towards the end, I will discuss the trends in the market today and how AI is powering businesses in the present era.

In the coming chapters, we will try and look at the below concepts in the realm of Artificial Intelligence:

- Implementing AI in Python Project

- Emergence of AI in the world

- Cloud-Ready applications for High Compute AI